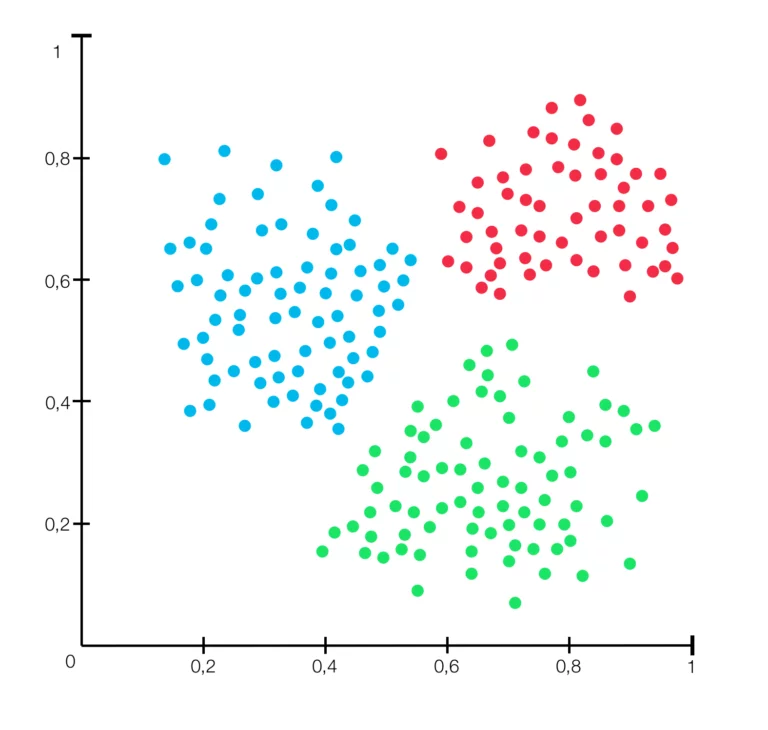

The clustering problem is the grouping of objects or data into clusters. In contrast to classification, they are not predetermined but result from the similarity of the different objects considered. Similarity means the spatial distance of the objects, represented as vectors. There are different methods to determine this distance, e.g. the Euclidean or Minkowski metrics. Already when determining the similarity one notices that there is no clear cluster determination.

Clustering Methods

There are different methods to classify the individual objects into clusters, each of which has its own advantages and disadvantages and achieves different results. In most cases, in order to obtain the best results for a data set, you have to try different methods with different parameters and then decide which one to use. There is no procedure that works best in general. Common unsupervised learning methods include:

Hierarchical Clustering

In hierarchical clustering, either the total amount of all objects (top-down) or the amount of all individual objects (bottom-up) is assumed as a cluster at the beginning. In the top-down process, the largest cluster is split by selecting the farthest object as the center of the cluster as a new cluster center. The algorithm then assigns all objects to the nearest cluster center. In the bottom-up procedure, the smallest and closest clusters are combined to form a new cluster. The difficulty with both methods is as such: on the one hand, how to determine the distance between the individual objects and, on the other hand, how far to divide or combine the clusters. After all, the process should not be repeated until as many clusters as objects or only one cluster remains.

Partitioning Clustering

In partitioning clustering, a number k is first defined, which specifies the number of clusters. The respective algorithm then determines k cluster percentages. For example, the K-Means algorithm, one of the most common partitioning clustering methods, randomly determines the cluster centers. The advantage of the partitioning method is that the assignment of the individual objects to a cluster can be changed during the finding of a cluster. On the other hand, the fact that the number of cluster centers is already defined at the beginning is a big disadvantage, since one has to execute the algorithm with different values and then select one in order to achieve optimal results.

Density-Based Clustering

In density-based clustering, the different clusters are determined by the density of the individual objects in space. The most popular of these methods is the DBSCAN algorithm. A distance ε together with a number k is determined. Objects that possess k neighboring objects with a distance smaller than ε together represent core objects. If two core objects are at a distance ε to each other, they are combined into a cluster. Objects that are not core objects but are close to a cluster are assigned to the cluster. The objects that belong to no cluster are considered noise objects.

Applications

Since clustering methods find abstract relationships in data that a person may not perceive as obvious, they are now used in many areas, such as in market analysis. In market analysis, clustering is widely used to evaluate data from surveys, for example. Here, customers are divided into general groups in order, for example, to enable better product placements. Clustering is also used in Natural Language Processing (NLP). Here they enable the automated evaluation of texts and language. Another interesting field in which clustering is used in crime analysis. Clustering allows the efficient deployment of police and security forces, as crime hot spots can be better identified.

Hurdles of Clustering

Clustering is very time-consuming for large amounts of data, especially if the room has several dimensions. Furthermore, the effectiveness of clustering is determined by the chosen method of distance measurement. As a rule, there is no generally correct result. This is due to the fact that the result is drastically different depending on the different methods with different parameters. This makes it difficult to interpret the result and check its correctness. Possibilities would be to carry out this examination, to compare the result of the clustering with known classifications or the evaluation by a human expert. However, both variants are only conditionally suitable, since clustering would not be necessary if there were already known classifications and human judgments are always very subjective.